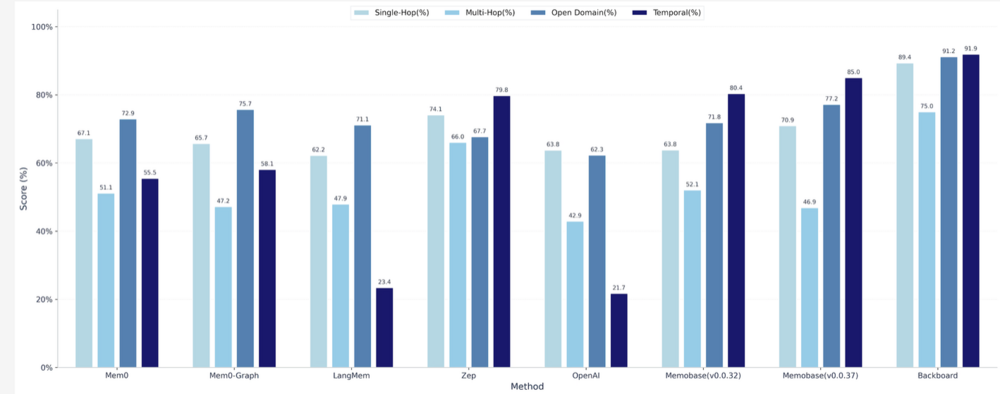

A funny thing happened while we were baselining our novel AI memory architecture against the industry-standard benchmark, LoCoMo: we broke the record. And we did it with no gaming and no adjustments, just pure, reproducible execution!

Backboard scored 90.1 percent overall accuracy using the standard task set and GPT-4.1 as the LLM judge. Full results, category breakdowns, and latency are available below, along with a one-click script and API so anyone can replicate the run. The same memory system is now live in our API, so you can plug in and start testing.

Full result set with replication scripts: https://github.com/Backboard-io/Backboard-Locomo-Benchmark

LoCoMo was designed to test memory across many sessions, long dialogues, and time-dependent questions. It is widely used to evaluate whether systems truly remember and reason over long horizons.

Recent public writeups place leading memory libraries around 67 to 69 percent on LoCoMo, and a simple Letta filesystem baseline around 74 percent. Backboard’s 90.1 percent suggests a material step forward for long-term conversational memory. We will maintain a live comparison table on our results page.

- Same dataset and task set as LoCoMo

- GPT-4.1 LLM judge with fixed prompts and seed

- Logs, prompts, and verdicts published for every question
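
Because a verdict is published for every question, the headline number can in principle be recomputed from the logs. The snippet below is a minimal sketch of that tally, not the script from the repository: the record layout (`category` and `correct` fields), the function name `accuracy_by_category`, and the sample rows are all assumptions made for illustration, and the sample rows are invented data, not benchmark results.

```python
from collections import defaultdict


def accuracy_by_category(verdicts):
    """Return (overall_accuracy, {category: accuracy}) from judge verdicts.

    Each verdict is assumed to be a dict with a 'category' string and a
    boolean 'correct' flag. This layout is hypothetical and may differ
    from the published log format.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for v in verdicts:
        totals[v["category"]] += 1
        hits[v["category"]] += int(v["correct"])

    per_category = {c: hits[c] / totals[c] for c in totals}
    n = sum(totals.values())
    overall = sum(hits.values()) / n if n else 0.0
    return overall, per_category


# Invented sample rows, for illustration only (not real results).
sample = [
    {"category": "single_hop", "correct": True},
    {"category": "single_hop", "correct": True},
    {"category": "temporal", "correct": True},
    {"category": "temporal", "correct": False},
]

overall, per_category = accuracy_by_category(sample)
print(f"overall: {overall:.1%}")
for name, acc in sorted(per_category.items()):
    print(f"{name}: {acc:.1%}")
```

Note that overall accuracy here is weighted by question count, so categories with more questions contribute more to the headline figure than a simple average of category scores would suggest.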

Build with Backboard today. Sign up takes under a minute.
